The Problem

Services businesses that rely solely on static pricing models and traditional above-the-line marketing tactics are unable to maximise the revenue from their fixed inventory. Unlike physical products, service inventory is perishable: a booking slot that goes unsold cannot be sold later.

The Approach

Codex was engaged to develop an online booking platform for services businesses to optimise utilisation of expiring inventory via dynamic scheduling, sequencing, pricing and bundling. Codex managed the end-to-end lifecycle of the project from ideation to market.

12-week discovery

Resulting in a 98-page executive summary.

15 months of design, development & testing

Using agile project management to get an MVP to market.

We Built Aionic End-to-End

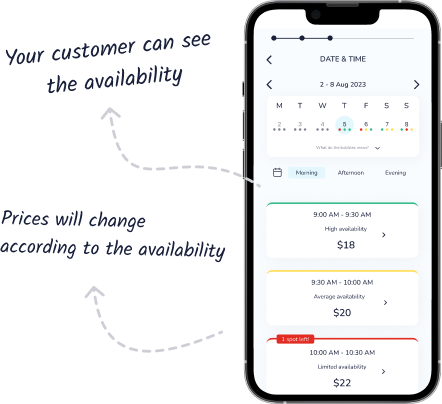

Seamless User Experience, Enhanced Business Performance

We combined user-friendly interfaces with powerful backend algorithms for a seamless user experience that boosts business performance.

Effortless Scalability with Modern Architecture

We utilised a serverless architecture, offering elastic scalability to meet growing customer demands without provisioning or managing additional infrastructure.

Revolutionising Booking with AI-Powered Insights

Our platform harnesses advanced machine learning to predict demand trends, ensuring optimal pricing and enhanced revenue opportunities.
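As a rough illustration of how a demand forecast can feed into pricing for perishable inventory, here is a minimal sketch. It is not the platform's actual model: the `Slot` fields, the `dynamic_price` function, the linear demand scaling, and the 24-hour last-minute discount are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    base_price: float        # list price for the service slot
    predicted_demand: float  # hypothetical forecast score between 0 and 1
    hours_to_start: float    # time left before the slot expires unsold


def dynamic_price(slot: Slot, floor_ratio: float = 0.5, surge_ratio: float = 1.3) -> float:
    """Hypothetical pricing rule: scale price with predicted demand,
    then discount slots that are close to expiring."""
    # Clamp the forecast so bad inputs cannot push the price out of range.
    demand = min(max(slot.predicted_demand, 0.0), 1.0)
    # Demand 0.0 maps to floor_ratio, demand 1.0 maps to surge_ratio.
    multiplier = floor_ratio + (surge_ratio - floor_ratio) * demand
    # Assumed rule: 10% last-minute discount within 24 hours of the start time.
    if slot.hours_to_start < 24:
        multiplier *= 0.9
    # Never price below the floor.
    multiplier = max(multiplier, floor_ratio)
    return round(slot.base_price * multiplier, 2)


if __name__ == "__main__":
    print(dynamic_price(Slot(base_price=100.0, predicted_demand=0.5, hours_to_start=2)))
```

In a real system the forecast would come from a trained model and the rule would be tuned against observed bookings; the point here is only that the price becomes a function of predicted demand and time to expiry rather than a static value.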

Serverless Case Study

This serverless infrastructure implementation showcases a scalable and cost-efficient architecture using AWS services. Here’s how each component contributes to cost efficiency.

Components of the Serverless Architecture

A AWS WAF and CloudFront combine security and content delivery. WAF provides cost-effective protection against web threats, charging for the rules you deploy and the requests you inspect. CloudFront delivers your content globally on a pay-as-you-go model that charges for data transfer and requests, optimising content delivery costs.

B Amazon Cognito and S3 Web Hosting together offer a robust foundation for web applications. Cognito manages user authentication and identity services and is billed per monthly active user, enhancing security without significant upfront investment. S3 provides cost-effective web hosting for static resources, charging only for storage used and data served, which makes scaling easy and economical as the user base and data demand grow.

C AWS AppSync is a managed GraphQL service for developing applications. It charges for the queries executed and the data transferred, and it removes the overhead of managing servers, which reduces operational costs.

D AWS Lambda executes code in response to triggers without the need to manage servers, billing for the milliseconds of compute time used. This granularity in billing ensures that you're only paying for the compute time your applications actually consume.

E Amazon Aurora Serverless & DynamoDB both streamline database management with a pay-for-what-you-use pricing strategy; DynamoDB offers fast, scalable NoSQL database solutions with pricing based on storage and throughput, while Aurora Serverless adjusts relational database capacity automatically, ensuring efficient resource utilisation and cost savings for fluctuating workloads.

F Amazon CloudWatch and CloudWatch Alarms provide monitoring and observability services. CloudWatch charges based on the volume of metrics and logs collected and the number of alarms created, allowing you to monitor application and infrastructure health without overinvesting in monitoring services.
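To make the pay-per-use billing described for AWS Lambda (component D) concrete, here is a minimal cost estimate sketch. The function name `lambda_monthly_cost` is hypothetical, and the default rates are illustrative assumptions rather than figures from this project; actual rates vary by region and architecture, and the sketch ignores any free tier.

```python
def lambda_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float = 0.0000166667,  # assumed illustrative rate
    price_per_million_requests: float = 0.20,   # assumed illustrative rate
) -> float:
    """Estimate a monthly Lambda bill from usage alone (no fixed server cost)."""
    # Compute is billed on duration multiplied by configured memory (GB-seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    compute_cost = gb_seconds * price_per_gb_second
    # Requests are billed separately, per million invocations.
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return round(compute_cost + request_cost, 2)


if __name__ == "__main__":
    # One million 100 ms invocations at 1 GB of memory.
    print(lambda_monthly_cost(1_000_000, 100, 1024))
    # No traffic means no charge, which is the core of the cost argument.
    print(lambda_monthly_cost(0, 100, 1024))
```

Because every term is multiplied by the number of invocations, the estimate falls to zero when there is no traffic and grows in proportion to demand.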

AI Case Study

This infrastructure implementation showcases an advanced Machine Learning Operations (MLOps) system, using AWS services. Here’s how each component contributes to an effective model deployment.

1 Data/Model Monitoring

2 Feature Store A centralised system for storing, sharing, and retrieving model features. Ensures consistent feature engineering across models and speeds up the development process.

3 Training Pipeline

4 Model Registry A centralised repository for managing models. It tracks versions, metadata, and enables controlled deployment and rollbacks of models, which is essential for model governance and lifecycle management.

5 Lambdas Serverless compute service to run code in response to events in model pipelines. Enables quick execution of functions without provisioning servers, allowing scalable, cost-effective, and event-driven model operations, from preprocessing to post-analysis.

6 Step Functions Orchestration tool to design and execute workflows, integrating multiple services for managing pipelines. Enables visualising and automating sequential steps, error handling, and state management, streamlining the model deployment and monitoring process.
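The orchestration described for Step Functions and Lambdas can be sketched as a small state machine definition in Amazon States Language, built here as a Python dictionary. This is an illustration under assumptions, not the project's actual workflow: the state names (`Preprocess`, `Train`, `RegisterModel`, `Deploy`), the placeholder ARNs, and the retry settings are all hypothetical.

```python
import json
from typing import Optional

# Hypothetical Lambda ARN template; REGION and ACCOUNT_ID are placeholders.
ARN = "arn:aws:lambda:REGION:ACCOUNT_ID:function:{name}"


def task(function_name: str, next_state: Optional[str] = None) -> dict:
    """Build a Task state that invokes a Lambda, retries, and routes errors."""
    state = {
        "Type": "Task",
        "Resource": ARN.format(name=function_name),
        # Retry failed tasks twice with exponential backoff (assumed settings).
        "Retry": [{"ErrorEquals": ["States.TaskFailed"], "IntervalSeconds": 5,
                   "MaxAttempts": 2, "BackoffRate": 2.0}],
        # Route any remaining error to a terminal failure state.
        "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "PipelineFailed"}],
    }
    if next_state is None:
        state["End"] = True
    else:
        state["Next"] = next_state
    return state


def build_definition() -> dict:
    """Sequential pipeline: preprocess, train, register the model, deploy."""
    return {
        "StartAt": "Preprocess",
        "States": {
            "Preprocess": task("preprocess", "Train"),
            "Train": task("train", "RegisterModel"),
            "RegisterModel": task("register-model", "Deploy"),
            "Deploy": task("deploy"),
            "PipelineFailed": {"Type": "Fail", "Error": "PipelineFailed",
                               "Cause": "A pipeline step failed after retries"},
        },
    }


def dangling_targets(definition: dict) -> list:
    """Return transition targets that do not name a defined state."""
    states = definition["States"]
    targets = {definition["StartAt"]}
    for state in states.values():
        if "Next" in state:
            targets.add(state["Next"])
        for catcher in state.get("Catch", []):
            targets.add(catcher["Next"])
    return sorted(targets - set(states))


if __name__ == "__main__":
    definition = build_definition()
    assert dangling_targets(definition) == []
    print(json.dumps(definition, indent=2))
```

The sequential steps, retries, and error routing live in the workflow definition rather than in the function code, so each Lambda can stay small and focused on a single step.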